Best Encog Neural Network structure (activation, bias)

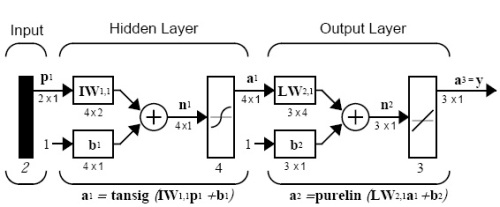

In neural network training, one of the most difficult problems is finding the best network structure: the optimal number of layers, the number of neurons in each layer, the bias per layer and the activation functions. These are usually determined by trial and error. We do the same here by varying the activation functions and the bias in a standard 3-layer feedforward neural network.

We cannot use the sigmoid as the activation function, because its output is limited to the 0..1 range, while our targets (daily percentage changes) can be negative. A commonly used alternative is the hyperbolic tangent, whose output range is -1..+1. Its derivative is easy to compute, which comes in handy in the training algorithm.
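A minimal Python illustration (not Encog code) of both points: the sigmoid never goes below 0, while tanh covers negative values, and the tanh derivative can be computed from the neuron's output alone, since for y = tanh(z) we have dy/dz = 1 - y². This is the same formula as the original `1.0d - x * x` line in the DerivativeFunction quoted in the postscript. The finite-difference check is only there to confirm the formula.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: its output always lies between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh_derivative_from_output(y: float) -> float:
    """Derivative of tanh written in terms of its output y = tanh(z): 1 - y*y."""
    return 1.0 - y * y


for z in (-3.0, 0.0, 3.0):
    y = math.tanh(z)
    # Central finite difference, used only to check the closed-form derivative.
    h = 1e-6
    numeric = (math.tanh(z + h) - math.tanh(z - h)) / (2 * h)
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  tanh={y:+.4f}  "
          f"derivative={tanh_derivative_from_output(y):.6f}  numeric={numeric:.6f}")
```

Because the derivative only needs the stored output, backpropagation can reuse the values already computed in the forward pass.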

We target 3-layer networks. The number of neurons in the input and output layers is fixed by our problem: we use the daily currentChangePercent as input and the nextChangePercent as target output, so we have 1 input and 1 output neuron. (Note: we do regression, not classification, so our output is 1-dimensional. In classification, we can easily have multidimensional outputs.) We have some freedom to choose the number of neurons in the middle layer.

For this study, it is also fixed, at 2 neurons.

Other parameters of the algorithm:

```csharp
int lookbackWindowSize = 200;
double outlierThreshold = 0.04;
int nNeurons = 2;
int maxEpoch = 20;
int[] nEnsembleMembers = new int[] { 11 };
```

We have the freedom to choose the activation function and the bias of each layer. We experiment with 3 different NN structures, i.e. 3 different choices of activation functions and biases:

1. Encog FeedForwardPattern(): (Tanh, Tanh, Tanh) layers, bias in the input layer

2. Matlab emulation: (Lin, Tanh, Lin) layers, no bias in the input layer

3. Jeff's network: (Tanh, Tanh, Lin) layers, no bias in the input layer

For robustness, we also vary the normalization boost parameters over the values 0.1, 1, 10 and 100 (shown here with 0.1):

```csharp
double inputNormalizationBoost = 0.1;
double outputNormalizationBoost = 0.1;
```
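The normalization code itself is not shown in this post. The following Python sketch is only one plausible reading, under a loudly stated assumption: the boost multiplies a minMax-normalized value, so boost = 1 maps min/max exactly to -1/+1, and larger boosts stretch them further. For comparison it also shows the stdDev kind of normalization discussed in the conclusions (mean ± 1 standard deviation mapped to ±1). Both helper names, `minmax_boost` and `stddev_normalize`, are hypothetical, and the sample data is made up.

```python
import statistics


def minmax_boost(values, boost):
    """HYPOTHETICAL helper: min-max normalize to -1..+1, then multiply by `boost`.
    How the real code applies the boost is not shown in this post; this is an assumption."""
    lo, hi = min(values), max(values)
    return [boost * (2.0 * (v - lo) / (hi - lo) - 1.0) for v in values]


def stddev_normalize(values):
    """HYPOTHETICAL helper: map mean -/+ 1 standard deviation to -1/+1.
    Values more than 1 SD away from the mean end up outside -1..+1."""
    mu = statistics.mean(values)
    sd = statistics.pstdev(values)
    return [(v - mu) / sd for v in values]


# Made-up daily change fractions (illustration only): mostly small moves, one outlier.
changes = [0.004, -0.006, 0.010, -0.002, 0.001, -0.035]

for boost in (0.1, 1, 10, 100):
    print(f"boost={boost:>5}:", [round(s, 3) for s in minmax_boost(changes, boost)])
print("stdDev:    ", [round(s, 3) for s in stddev_normalize(changes)])
```

Under this assumption, boost = 1 is exactly the usual minMax normalization, and larger boosts push the extreme values well beyond ±1.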

The code that builds the 3 structures:

```csharp
if (p_nnStructure == 1)
{
    // most popularly used in Encog examples
    // the default Generate() gives:
    // all 3 layers have TanH() activation (stupid); only the middle or maybe the output layer should
    // the last layer has no bias, but the first and second have
    // the middle layer has 2 biases (the biasWeight is 2 dimensional)
    // the biasWeights are initialized randomly
    FeedForwardPattern pattern = new FeedForwardPattern();
    pattern.InputNeurons = 1;
    pattern.OutputNeurons = 1;
    pattern.ActivationFunction = new ActivationTANH(); // the Sigmoid cannot represent negative values
    pattern.AddHiddenLayer(p_nNeurons);
    network = pattern.Generate();
}
else if (p_nnStructure == 2)
{
    // version 2. Matlab emulation;
    // consider the newFF() in Matlab:
    // 1. The input is not a layer; no activation function, no bias
    // 2. The middle layer has a bias, and tansig transfer function
    // 3. The output is a layer; having a bias (we checked); but has Linear activation (in the default case);
    //    in the Matlab book, there are examples with tansig output layers too
    network = new BasicNetwork();
    network.AddLayer(new BasicLayer(new ActivationLinear(), false, 1));
    network.AddLayer(new BasicLayer(new ActivationTANH(), true, p_nNeurons));
    network.AddLayer(new BasicLayer(new ActivationLinear(), true, 1));
    network.Structure.FinalizeStructure();
}
else if (p_nnStructure == 3)
{
    // Jeff uses it:
    // "I've been using a linear activation function on the output layer, and sigmoid or htan on the input
    //  and hidden lately for my prediction nets, and getting lower error rates than a uniform activation
    //  function." (uniform: using the same on every layer)
    network = new BasicNetwork();
    network.AddLayer(new BasicLayer(new ActivationTANH(), false, 1));
    network.AddLayer(new BasicLayer(new ActivationTANH(), true, p_nNeurons));
    network.AddLayer(new BasicLayer(new ActivationLinear(), true, 1));
    network.Structure.FinalizeStructure();
}
```

In the previous blog post we mentioned these differences between Matlab and Encog:

- The default Encog 3-layer backprop network has extra biases and neurons, so it differs from Matlab. More specifically:

A.

The newFF() network in Matlab:

1. The input is not a layer: no activation function, no bias. The network has only 2 layers (the middle and the output).

2. The middle layer has a bias and a tansig transfer function.

3. The output is a layer with a bias (we checked), but it has linear activation (in the default case); in the Matlab book, there are examples with tansig output layers too.

B.

The default FeedForwardPattern().Generate() in Encog gives:

1. All 3 layers have TanH() activation. We found this weird: only the middle, or maybe the output, layer should have an activation function.

2. The last layer has no bias, but the first and second do.

3. The middle layer has 2 biases (the biasWeight is 2-dimensional) in the 2-neuron case.

4. The biasWeights are initialized randomly. Correct; that is expected.

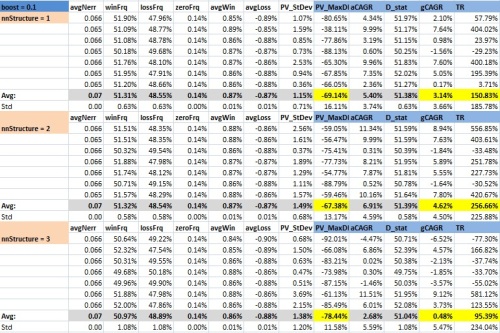

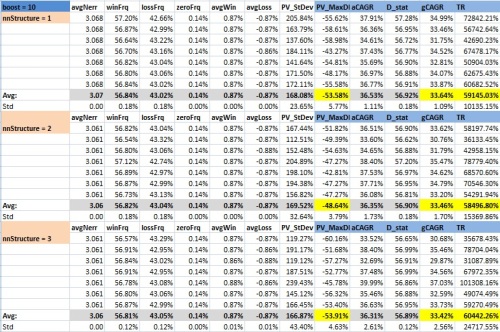

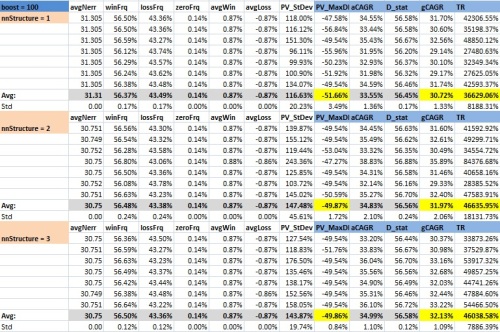

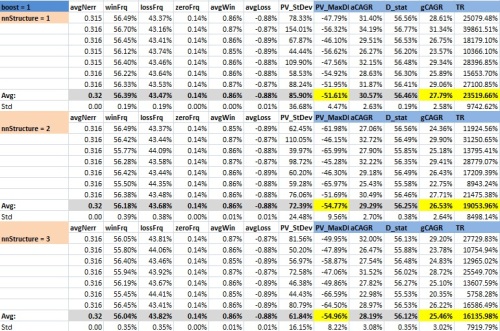

Here are the measured results. Note that we also measured the average network training error (avgNerr column), but there was no significant difference between the NN structures.

We take the average gCAGR (geometric Compound Annual Growth Rate) as the performance measure, but the TR (Total Return) can be used as well.
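For reference, TR and CAGR are linked by simple compounding: a total return of R% turns 1 dollar into 1 + R/100 dollars, and the CAGR is the constant yearly rate that gives the same multiplier over the period. A small Python sketch (helper names are ours, not from the backtest code), fed with the rounded figures quoted in the conclusions (TR = 60,000%, Buy&Hold about 5x, 23 years). Because the inputs are rounded, the printed rates will not match the quoted CAGR values exactly.

```python
def total_return_multiplier(total_return_percent: float) -> float:
    """A total return of R% turns 1 dollar into (1 + R/100) dollars."""
    return 1.0 + total_return_percent / 100.0


def cagr(multiplier: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that yields `multiplier` over `years`."""
    return multiplier ** (1.0 / years) - 1.0


nn_multiplier = total_return_multiplier(60_000)  # TR = 60,000% -> 601x
print(f"NN:       x{nn_multiplier:.0f}, CAGR {cagr(nn_multiplier, 23):.1%}")
print(f"Buy&Hold: x5, CAGR {cagr(5, 23):.1%}")
```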

Here are our measurements:

For normalizationBooster = 0.1:

For normalizationBooster = 10:

For normalizationBooster = 100:

Conclusions:

– We made 4 tests for robustness (one for each normalization boost level).

– Using the average gCAGR values, nnStructure1 was the winner 2 times, nnStructure2 once and nnStructure3 once.

– Using the average TR as the performance measure, nnStructure1 was the winner once, nnStructure2 2 times and nnStructure3 once.

– If we take the SDs (standard deviations) into account, the decision boundary is even more blurred.

– We conclude that neither gCAGR nor TR, nor even PvMaxDD, can differentiate between the 3 network structures.

– The avgNetworkTrainingError cannot differentiate between the network structures either.

– Overall, it doesn’t matter which structure we use; they are almost equivalent. So this study did not produce much of a result: we wanted to find the best NN structure, and we could not, because all of them are about equally good.

– However, as a decision has to be made, we prefer structure 2, because linear input and output layers make the most sense to us and ensure simplicity. As Einstein said: Make everything as simple as possible, but not simpler. (Also Occam’s razor: a principle that generally recommends selecting the competing hypothesis that makes the fewest new assumptions, when the hypotheses are equal in other respects.) We also prefer to omit the bias in the input layer.

– Also, I am not sure Encog uses the activation function of the first layer at all; if it does not, it doesn’t matter which one we choose. (We investigated this separately in the postscript of this blog post; geeks should read that too.)

– Note a by-product of this study: the optimal value of the normalization boost parameter is around 10. This tells us 2 things:

1. The optimal boost is bigger than 1. This means the min and max input values shouldn’t be crammed exactly into the -1..+1 range. So this regression task prefers not to use the usual minMax normalization (where the min value is mapped to -1 and the max value to +1). It prefers a stdDev kind of normalization, where the values 1 standard deviation away from the mean are mapped to -1 and +1, so values further than 1 SD from the mean are mapped outside the -1..+1 range.

2. The optimal boost is lower than 100. Beyond some point, numerical problems occur: the NN’s preferred output range is -1..+1, not -100..+100, and if the range is stretched too much, the network cannot forecast precisely in that output range. A possible solution is to increase the input boost but keep the output boost small (but that is another study).

– As normalization boost = 10 turned out better than boost = 100, we are happy to conclude that we got even better results than in the last blog post, where we only tested boost = 1 and boost = 100.

– The better result achieved with boost = 10:

The average TR (Total Return) = 60,000%: it multiplied your initial 1 dollar by about 600 in 23 years! That is about 33.4% CAGR (per year). The directional accuracy is 56.9%. We conclude again that over such a long period (23 years), this is our best prediction, the best result so far.

– Compare this to the Buy&Hold approach: Buy&Hold multiplied the initial capital by 5 in 23 years (7.9% annual CAGR), while the Encog NN multiplied it by 600, which is 120 times more.
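The directional accuracy quoted above (56.9%) is, as we read the term, the share of days on which the predicted sign of nextChangePercent matches the realized sign. A minimal Python sketch under that assumption; the exact definition used in the backtest (for example, how days with exactly zero change are treated) may differ, and the sample data is made up.

```python
def directional_accuracy(predicted, actual):
    """Share of days on which the forecast sign equals the realized sign.
    ASSUMPTION: days where either value is exactly 0 are skipped."""
    pairs = [(p, a) for p, a in zip(predicted, actual) if p != 0 and a != 0]
    if not pairs:
        raise ValueError("no non-zero (prediction, outcome) pairs")
    hits = sum(1 for p, a in pairs if (p > 0) == (a > 0))
    return hits / len(pairs)


# Made-up forecasts of nextChangePercent and realized values (illustration only).
forecast = [0.010, -0.020, 0.003, 0.005]
realized = [0.020, -0.010, -0.004, 0.001]
print(directional_accuracy(forecast, realized))  # prints 0.75
```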

Next time, we will investigate a simple money management technique to possibly improve the profit potential: we will increase or decrease the position size based on our conviction.

********************


Bonus postscript for Encog programmers: (this chapter is only for geeks)

We wanted to check whether and how the activation function and bias of the input layer are used in Encog. Are they used at all? We traced the XOR example application found among the Encog framework examples.

1. Forward propagation: Compute()

In Compute(), the activation function of the first layer is never used.

```csharp
public virtual void Compute(double[] input, double[] output)
{
    int sourceIndex = this.layerOutput.Length
            - this.layerCounts[this.layerCounts.Length - 1];

    EngineArray.ArrayCopy(input, 0, this.layerOutput, sourceIndex,
            this.inputCount);

    for (int i = this.layerIndex.Length - 1; i > 0; i--) // process only 2 layers
    {
        ComputeLayer(i);
    }

    EngineArray.ArrayCopy(this.layerOutput, 0, output, 0, this.outputCount);
}
```

Note that with this instruction

```csharp
EngineArray.ArrayCopy(input, 0, this.layerOutput, sourceIndex, this.inputCount);
```

the input is simply copied into this.layerOutput, without applying any activation function.

So the output of the first layer (the target array is this.layerOutput) equals exactly the input of the first layer, without any modification, weighting, or activation function.

This may be a bug in Encog, or it may be on purpose (probably it is intended).

For all other layers, the this.layerOutput array really contains the output of that layer, i.e. layerOutput = activationFunction(Sum(weights * inputs) + bias term). The input layer is the only exception: effectively, the input layer doesn’t exist.

Also, we noticed that the code

```csharp
EngineArray.ArrayCopy(input, 0, this.layerOutput, sourceIndex, this.inputCount);
```

copies only the input values (the 2 inputs in the XOR problem), regardless of whether we specified a bias in the input layer or not. Therefore, even the bias of the input layer is never used in Compute().
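To see why this copy makes structures 2 and 3 indistinguishable, here is a framework-free Python sketch (not Encog's code). The hypothetical `forward()` computes a 1-2-1 network with a tanh hidden layer and a linear output, and either copies the input unchanged, as the ArrayCopy above does, or applies an input activation. With the copy, a Lin and a Tanh input layer give identical outputs; they would only differ if the input activation were really applied. The weights are arbitrary made-up values shared by both structures.

```python
import math


def linear(z: float) -> float:
    """ActivationLinear equivalent: identity."""
    return z


def forward(x, w_hidden, b_hidden, w_out, b_out, input_activation, apply_input_activation):
    """HYPOTHETICAL 1-2-1 forward pass: tanh hidden layer, linear output.
    With apply_input_activation=False the input is copied unchanged into the
    first layer's output, mirroring the ArrayCopy in Compute()."""
    first = input_activation(x) if apply_input_activation else x
    hidden = [math.tanh(w * first + b) for w, b in zip(w_hidden, b_hidden)]
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out


# Arbitrary made-up weights, shared by both structures.
weights = dict(w_hidden=[0.8, -1.3], b_hidden=[0.1, 0.2], w_out=[1.5, 0.7], b_out=-0.05)

for x in (-0.5, 0.2, 0.9):
    lin_in = forward(x, input_activation=linear, apply_input_activation=False, **weights)      # structure 2
    tanh_in = forward(x, input_activation=math.tanh, apply_input_activation=False, **weights)  # structure 3
    tanh_used = forward(x, input_activation=math.tanh, apply_input_activation=True, **weights) # if it were applied
    print(f"x={x:+.1f}  Lin input={lin_in:.6f}  Tanh input={tanh_in:.6f}  Tanh applied={tanh_used:.6f}")
```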

2. Training

However, the derivative of the activation function of the first layer is used in Train(), in

```csharp
private void ProcessLevel(int currentLevel)
{
    …
    this.layerDelta[yi] = sum
            * activation.DerivativeFunction(this.layerOutput[yi]);
}
```

We also ran an experiment: we replaced the derivative with Double.NaN.

```csharp
public virtual double DerivativeFunction(double x)
{
    //return (1.0d - x * x); // orig
    return Double.NaN; // Agy
}
```

The result: even though the derivative was called, the NaN did not propagate into the calculations, and the XOR example produced proper, flawless results (as if those NaN values were not used). So we are convinced that even if the derivative is called, its value is not used later. However, this means that Encog does unnecessary calculations (not very efficient). It also means that although we have to specify the input layer as a proper layer (in contrast to Matlab), with a bias and an activation function, these are not used. So Encog virtually has only 2 layers, the middle layer and the output layer (as Matlab has).
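The reasoning behind the NaN experiment rests on IEEE 754 arithmetic: +, -, * and / with a NaN operand return NaN, even 0 * NaN. A minimal Python illustration with hypothetical helper names (only the delta formula follows the ProcessLevel() excerpt above, and the update rule is a generic gradient-descent step, not Encog's trainer): if the NaN derivative reached even one weight update, that weight, and every output computed from it, would become NaN. A clean XOR result therefore indicates the value was never consumed.

```python
import math


def nan_derivative(y: float) -> float:
    """Stand-in for the modified DerivativeFunction that always returns NaN."""
    return math.nan


def layer_delta(error_sum: float, layer_output: float, derivative) -> float:
    """delta = sum * derivative(output), the shape of the ProcessLevel() line."""
    return error_sum * derivative(layer_output)


delta = layer_delta(0.3, 0.2, nan_derivative)

# HYPOTHETICAL plain gradient-descent update: weight += learningRate * delta * input.
weight = 0.5
weight += 0.1 * delta * 0.7
print(math.isnan(delta), math.isnan(weight))  # prints True True

# Even multiplying by zero does not remove a NaN.
print(math.isnan(0.0 * delta))  # prints True
```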

Therefore, since in Encog the activation function and bias of the input layer are irrelevant, it is no wonder that our performance study found no difference between structure versions 2 and 3: the only difference between them is that version 2 uses linear activation in the input layer, while version 3 uses tanh.

The disturbing fact is that the Encog programmer has to specify the activation and bias for the input layer, and believes he is making a cardinal decision that can greatly affect the output or the prediction power. However, his choice makes no difference.

We guess Encog misleads the programmer because it is simpler to implement the input as a general layer, while in practice it is an empty layer. Beware of the illusion!
