"Hand me a clamp, stat," a skinny guy in a white lab coat says with some urgency. An older man, who had been standing back from the messy melee, looks over the shoulder of the first man. "Quick, the blood's going everywhere," he says. He's holding a syringe full of bright blue liquid.

"OK, OK," says another white-coated man, hurrying off. He returns a few seconds later. "Got the clamp. And some gauze as well."

I stand in the corner of the room for a few minutes and watch the men operate on the patient. Eventually, when everything seems to have stabilised, I cautiously take a step towards the workbench and assess the damage. "Is it usually this messy?" I ask, looking down at a mound of sodden tissues, pipes, pumps, and syringes. The whole thing throbs gently, rhythmically, unnervingly.

"Yep, I'm afraid so. We still have a few bugs to iron out with the 5D electronic blood..."

* * *

Charged-up electrochemical fluid ("5D blood") goes into the blue pots, then flows around the system and into the red pots, providing power and cooling to the chip in the middle. The blood then needs to be recharged. (Sebastian Anthony)

It's alliiiive! (Sebastian Anthony)

Or, well, actually it's a bit... dead. (Sebastian Anthony)

The complete test setup. (Sebastian Anthony)

This is kind of the precursor to IBM's 5D blood research: it's the water-cooled gallium-arsenide solar cell used in the Solar Sunflower. (Sebastian Anthony)

One of the greatest problems facing the intertwined fields of computer science, electronic engineering, and information technology is density. Simply put, it is very, very hard to cram more and more digital functionality (compute, RAM, NAND flash, etc.) into a given volume of space. This might sound somewhat counterintuitive, considering how small modern computer chips are, but just think about it for a moment. Your desktop PC case probably has a volume of 50 litres or more—but the CPU, GPU, RAM, and handful of other chips that actually constitute the computer probably account for less than 1 percent of that volume.
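To put a rough number on the "less than 1 percent" claim, here is a back-of-the-envelope sketch in Python. The package dimensions and chip counts are assumptions picked for illustration (roughly a socketed CPU, a graphics chip, a set of DRAM chips, and a handful of support chips), not measurements of any particular PC.

```python
# Back-of-the-envelope estimate of how much of a desktop case is occupied
# by the chips that actually do the computing. All chip dimensions below
# are illustrative assumptions, not measured values.

CASE_VOLUME_L = 50.0  # the "50 litres or more" case from the text

# Hypothetical package sizes in millimetres: (width, depth, height, count)
CHIPS_MM = {
    "CPU package": (37.5, 37.5, 4.0, 1),
    "GPU package": (45.0, 45.0, 3.0, 1),
    "DRAM chips": (10.0, 12.0, 1.0, 16),
    "other chips": (10.0, 10.0, 1.5, 10),
}


def chip_volume_litres(chips):
    """Sum the volumes of all chips, converting mm^3 to litres (1 L = 1e6 mm^3)."""
    total_mm3 = sum(w * d * h * n for (w, d, h, n) in chips.values())
    return total_mm3 / 1e6


def occupied_fraction(chips, case_litres):
    """Fraction of the case volume taken up by the listed chips."""
    return chip_volume_litres(chips) / case_litres


if __name__ == "__main__":
    frac = occupied_fraction(CHIPS_MM, CASE_VOLUME_L)
    print(f"Chips occupy about {frac * 100:.3f}% of the case")
```

With these assumed sizes the chips add up to about 0.015 litres, roughly 0.03 percent of a 50-litre case. Even doubling every linear dimension (an eightfold increase in volume) leaves the total at around a quarter of a percent.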

It isn't that chip designers and computer makers don't want to make better use of that empty space inside your computer, but currently it's technologically infeasible to do so. We can just about squeeze in two or three graphics cards by using liquid cooling and perhaps two high-end CPUs if you're feeling particularly sassy. Going beyond that is really difficult.

In recent years we've seen the rise of one method of increasing density: stacking one die or chip on top of another. Even there, though, chip companies seem to be struggling to go beyond a two-high stack, with logic (CPU, GPU) at the bottom and memory on top, or perhaps a four- or eight-high stack of DRAM dies in the case of high-bandwidth memory (HBM). Despite fairly regular announcements from various research groups and semiconductor companies, and the maturation of 3D stacking (through-silicon vias, or TSVs) and packaging technologies (package-on-package, or PoP), multi-storey logic chips are rare beasts indeed.

In both cases—cramming more graphics cards into your PC case or making a skyscraper chip with a dozen logic dies in the same package—it is the same two problems that get in the way: heat dissipation and power delivery.

Let's tackle the tricky topic of thermal dissipation first. Over the years, you may have noticed that the maximum TDP (thermal design power) of a high-end CPU hasn't really changed. It hit around 130W with the Pentium D in the mid-2000s... and then stopped. There are a number of complex reasons for this, but two are most pertinent to this story.

First, as chips get smaller, there is less surface area that makes contact with the heatsink/water block/etc., which puts some fairly stringent limits on the absolute amount of thermal energy that can be dissipated by the chip. Second, as chips get smaller, hot spots—clusters of transistors that see more action than other parts of the chip—become denser and hotter. And because these hot spots are also getting physically smaller as transistors get smaller, they fall afoul of the first issue as well. The smaller the hot spot, the harder it is to ferry the heat away.
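The arithmetic behind both points is heat flux: power divided by the area it leaves through. The sketch below holds power at the roughly 130W ceiling from the text while shrinking a hypothetical die, then models a hypothetical hot spot that carries 20 percent of the power in 5 percent of the area. All areas and percentages are illustrative assumptions.

```python
def heat_flux_w_per_cm2(power_w: float, area_mm2: float) -> float:
    """Average heat flux leaving an area, in W/cm^2 (100 mm^2 = 1 cm^2)."""
    return power_w / (area_mm2 / 100.0)


TDP_W = 130.0  # roughly the high-end desktop ceiling mentioned in the text

# Hypothetical die areas (mm^2) for successive process shrinks at the same power.
DIE_AREAS_MM2 = (300.0, 200.0, 150.0)

# Hypothetical hot spot: 20% of the power concentrated in 5% of a 150 mm^2 die.
hot_spot_flux = heat_flux_w_per_cm2(0.20 * TDP_W, 0.05 * 150.0)
average_flux = heat_flux_w_per_cm2(TDP_W, 150.0)

if __name__ == "__main__":
    for area in DIE_AREAS_MM2:
        print(f"{area:5.0f} mm^2 die: {heat_flux_w_per_cm2(TDP_W, area):5.1f} W/cm^2")
    print(f"hot spot: {hot_spot_flux / average_flux:.1f}x the average flux")
```

Shrinking the assumed die from 300 to 150 square millimetres doubles the average flux (from about 43 to about 87 W/cm²), and the assumed hot spot runs at four times that average, all at the same total power.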

Now imagine you have two CPUs stacked on top of each other. With consumer-grade air or liquid cooling, we could probably prevent the top CPU from melting, but how on earth do you dissipate the heat produced by the lower CPU? And what happens if you stack hot spots on top of each other?
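A crude way to see why the lower die is the problem is a one-dimensional thermal-resistance model: every watt from both dies leaves through the cooler on top, and the bottom die's heat must first cross the bonding layer and the top die. The sketch below uses that lumped series model; the resistances, power figures, and the `Stack` class are hypothetical and ignore lateral heat spreading, so treat the numbers as illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Stack:
    """Hypothetical two-die stack under a single top-mounted cooler.

    Lumped, one-dimensional model: heat flows straight up with no lateral
    spreading. All values are illustrative assumptions.
    """
    ambient_c: float          # air or coolant temperature, deg C
    r_cooler_k_per_w: float   # top die -> ambient (TIM plus heatsink), K/W
    r_between_k_per_w: float  # bottom die -> top die (bond layer plus top die), K/W
    p_top_w: float            # power dissipated by the top die, W
    p_bottom_w: float         # power dissipated by the bottom die, W

    def top_temp_c(self) -> float:
        # Heat from both dies has to exit through the same cooler.
        total_w = self.p_top_w + self.p_bottom_w
        return self.ambient_c + total_w * self.r_cooler_k_per_w

    def bottom_temp_c(self) -> float:
        # The bottom die sits one extra thermal resistance away from the cooler,
        # and only its own heat crosses that extra layer.
        return self.top_temp_c() + self.p_bottom_w * self.r_between_k_per_w


if __name__ == "__main__":
    single = Stack(25.0, 0.3, 0.2, p_top_w=65.0, p_bottom_w=0.0)
    stacked = Stack(25.0, 0.3, 0.2, p_top_w=65.0, p_bottom_w=65.0)
    print(f"single die:    {single.top_temp_c():.1f} C")
    print(f"stack, top:    {stacked.top_temp_c():.1f} C")
    print(f"stack, bottom: {stacked.bottom_temp_c():.1f} C")
```

Under these assumptions, adding a second 65W die raises the top die from about 44.5°C to 64°C with the same cooler, and the bottom die lands at about 77°C. Stacked hot spots would make the local picture worse still, which a lumped model like this cannot show.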

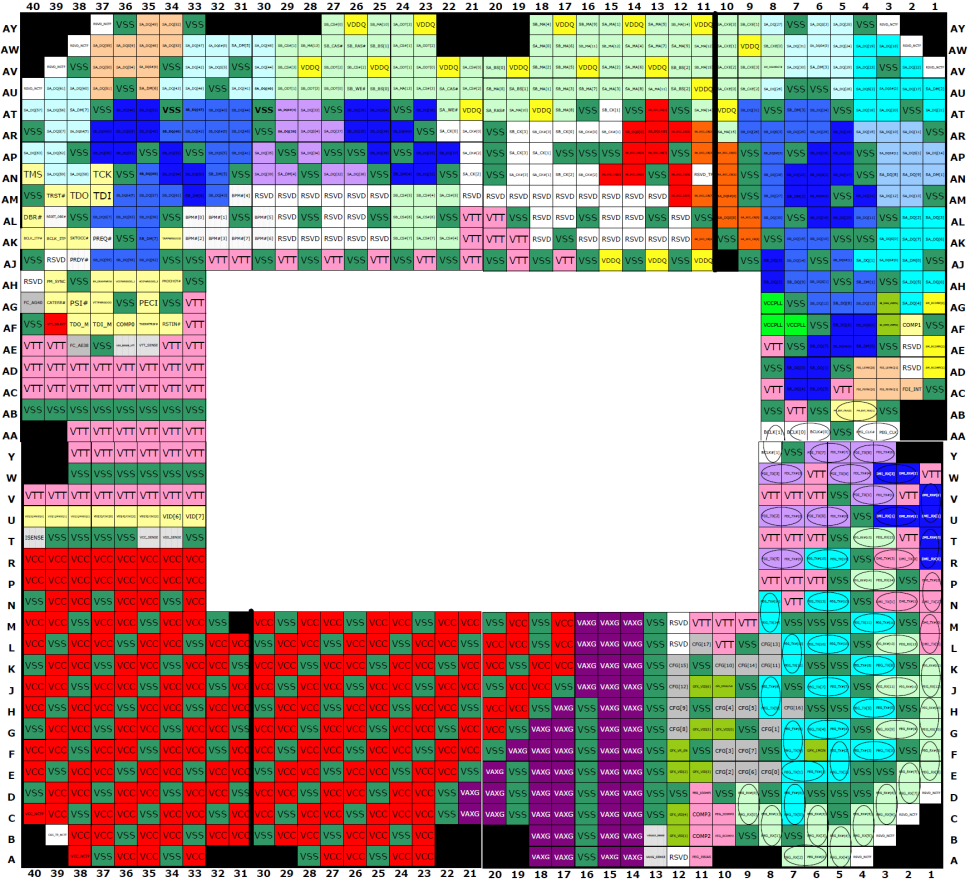

The other, lesser-known issue that prevents chip stacking is power delivery. Did you know that on a modern CPU, such as an LGA 1155 Ivy Bridge chip, the majority of those 1155 pins are used for power delivery? Take a look at the diagram above. All of the boxes that begin with "V" (VCC for supply, VSS for ground) are involved in delivering a stable flow of electricity to Ivy Bridge's 640 million transistors and other features.
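One reason power eats so many pins is that pins carry current, not watts, and at roughly one volt a CPU draws tens of amps. The sketch below turns that into a minimum pin count with I = P / V. The 77W power, 1.05V core voltage, 0.5A per-pin rating, and the one-ground-pin-per-supply-pin pairing are all illustrative assumptions, not Intel specifications; real packages add margin for voltage droop and separate supply rails, so treat the result as a floor.

```python
import math


def power_pins_needed(power_w: float, core_voltage_v: float, amps_per_pin: float) -> int:
    """Rough minimum number of supply plus ground pins for a given load.

    Assumes (hypothetically) that every supply pin is paired with one
    ground-return pin and that current divides evenly across pins.
    """
    current_a = power_w / core_voltage_v               # I = P / V
    supply_pins = math.ceil(current_a / amps_per_pin)  # pins sharing that current
    return 2 * supply_pins                             # add matching return pins


if __name__ == "__main__":
    one_cpu = power_pins_needed(77.0, 1.05, 0.5)
    two_cpus = power_pins_needed(2 * 77.0, 1.05, 0.5)
    print(f"one CPU: at least {one_cpu} power/ground pins")
    print(f"two stacked CPUs: at least {two_cpus} power/ground pins")
```

With these assumptions a single chip drawing about 73A needs at least 294 power and ground pins, and feeding a second CPU stacked on top doubles the current, pushing the floor to 588 pins, every one of whose supply paths would also have to pass up through the lower die.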

If you want to stack one CPU on top of another, you not only have to increase the number of pins at the bottom of the chip, but you also have to find a way of connecting those power lines to the upper chip through the lower chip. This is incredibly difficult, and it's one of the main reasons that most 3D chip stacks, either via package-on-package (PoP) or TSV, have so far consisted of a CPU at the bottom and RAM on top. RAM is pretty much the opposite of a CPU, with most pins dedicated to data transfer rather than power delivery.
